Technology used to realise construction 3

Several technologies have been incorporated and developed as part of the RIMM project.

Sensors

A set of wireless, wearable sensors has been developed to allow the performer to control sound generation, audio mixing and spatialisation, real-time signal processing and graphics. These sensors include actuators controlled by toe pressure, accelerometers, infra-red video tracking and a digital compass. Following a video analysis of the performer's movements, the system was designed to capture performance gestures without interfering with the performer's technique and posture. The sensors were interfaced via a PIC microcontroller to a customised radio transmission system, which provides wireless RS-232 communication with the main computer systems.
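
The data format carried over the RS-232 link is not described here. As a minimal sketch, assuming a hypothetical 4-byte frame (a 0xFF sync byte, a sensor id, then a 16-bit big-endian reading), the receiving computer could recover sensor readings from the raw serial byte stream as follows. The frame layout and the names `parse_frames` and `SensorReading` are illustrative assumptions, not part of the RIMM system.

```python
from dataclasses import dataclass
from typing import Iterator

# Hypothetical frame layout (an assumption, not the RIMM protocol):
#   byte 0    : sync marker 0xFF
#   byte 1    : sensor id (0-254)
#   bytes 2-3 : 16-bit big-endian sensor reading
SYNC = 0xFF
FRAME_LEN = 4


@dataclass
class SensorReading:
    sensor_id: int
    value: int


def parse_frames(stream: bytes) -> Iterator[SensorReading]:
    """Scan a raw byte stream and yield every complete frame found."""
    i = 0
    while i + FRAME_LEN <= len(stream):
        if stream[i] != SYNC:
            i += 1  # resynchronise: skip bytes until the next sync marker
            continue
        sensor_id = stream[i + 1]
        value = int.from_bytes(stream[i + 2:i + 4], "big")
        yield SensorReading(sensor_id, value)
        i += FRAME_LEN
    # Any trailing partial frame is left unread until more bytes arrive.
```

For example, `list(parse_frames(bytes([0x12, 0xFF, 0x03, 0x01, 0x2C])))` skips the stray leading byte and yields a single reading of 300 from sensor 3. A real link would also need error checking (for instance a checksum byte), since radio noise can produce false sync markers.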

A Real-Time Graphics Engine

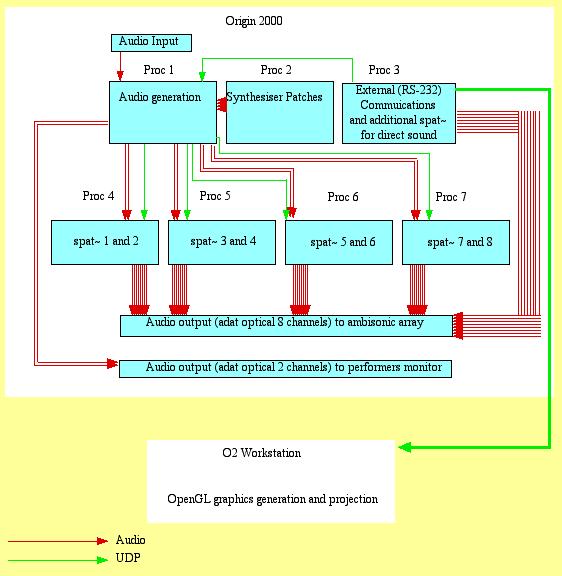

Written using the OpenGL Application Programming Interface, this engine runs on a Silicon Graphics O2 workstation and communicates with the rest of the system via UDP network sockets.
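
The message format exchanged with the graphics engine is not specified. A minimal sketch, assuming hypothetical plain-text datagrams of the form `<parameter> <value>`, shows how a control process could send one parameter update to a UDP listener. Here both ends run on the loopback interface for illustration; `encode_message`, `decode_message` and `send_and_receive` are hypothetical names.

```python
import socket
from typing import Tuple

# Hypothetical wire format (an assumption): ASCII "<parameter> <value>",
# one control update per UDP datagram. Parameter names must not contain spaces.


def encode_message(param: str, value: float) -> bytes:
    return f"{param} {value}".encode("ascii")


def decode_message(datagram: bytes) -> Tuple[str, float]:
    param, value = datagram.decode("ascii").split()
    return param, float(value)


def send_and_receive(param: str, value: float) -> Tuple[str, float]:
    """Round-trip one control message over the loopback interface."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(("127.0.0.1", 0))  # port 0: the OS picks a free port
        receiver.settimeout(1.0)         # avoid blocking forever if a datagram is lost
        sender.sendto(encode_message(param, value), receiver.getsockname())
        datagram, _addr = receiver.recvfrom(1024)
        return decode_message(datagram)
    finally:
        sender.close()
        receiver.close()
```

UDP suits this kind of link because each datagram is self-contained and a lost update is soon superseded by the next one, so no retransmission delay is introduced into the real-time loop.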

A Multiprocessor Configuration of jMax for Real-Time Multiple Media Content Generation using Surround Sound

A series of intercommunicating jMax patches has been developed to run in real time on a Silicon Graphics Origin 2000 computer. These patches include nine Spat.~room simulators working together to localise multiple sound materials. The intensive computation required for real-time room simulation necessitated the use of multiple R10000 processors. Multiple 8-channel audio streams and control information are communicated between the instances of jMax using UNIX shared memory devices and UDP sockets, taking advantage of the high inter-processor bandwidth of the Origin 2000. The final soundfield is decoded using an 8-channel cubic decoder (customised by IRCAM for the RIMM project) and reproduced over an 8-speaker ambisonic array.
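
The details of IRCAM's customised decoder are not given here. As a minimal sketch of the general technique only, the following encodes a mono sample into first-order B-format (W, X, Y, Z, with W carrying the conventional 1/sqrt(2) scaling) and applies a generic textbook "basic" (velocity) decode to eight loudspeakers at the corners of a cube. The speaker ordering and all function names are assumptions for illustration.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def cube_directions() -> List[Vec3]:
    """Unit vectors to the 8 corners of a cube centred on the listener."""
    s = 1.0 / math.sqrt(3.0)
    return [(x * s, y * s, z * s)
            for x in (1, -1) for y in (1, -1) for z in (1, -1)]


def encode(signal: float, direction: Vec3) -> Tuple[float, float, float, float]:
    """Encode a mono sample arriving from a unit direction into (W, X, Y, Z)."""
    ux, uy, uz = direction
    return (signal / math.sqrt(2.0), signal * ux, signal * uy, signal * uz)


def decode_cube(bformat: Tuple[float, float, float, float]) -> List[float]:
    """Generic basic (velocity) decode of first-order B-format to a cube.

    For a cube, sum_i u_i u_i^T = (n/3) I, so scaling the directional terms
    by 3 makes the velocity vector of the speaker gains point back at the
    source, while sum_i u_i = 0 keeps the total gain equal to the signal.
    """
    w, x, y, z = bformat
    speakers = cube_directions()
    n = len(speakers)
    return [(math.sqrt(2.0) * w + 3.0 * (x * sx + y * sy + z * sz)) / n
            for sx, sy, sz in speakers]
```

For a source straight ahead on the x axis, the four front speakers each receive about 0.34 and the four rear speakers about -0.09 of the signal. This basic decode drives the far side of the array out of phase, which is one reason practical decoders, possibly including the customised one used here, often apply different weightings.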

construction 3 system overview

© University of York, York, UK